Like many Linux and BSD nerds everywhere, I occasionally need to download a package or two. Oftentimes this is a one-off: an Ubuntu 18.10 desktop that is a relative snowflake amongst the fleet of Ubuntu 18.04 laptops and CentOS 7 servers, or the odd experimental deviation into Solus OS. These things happen, and in those scenarios there is no optimisation to downloading a package apart from finding a speedy or geographically relevant mirror.

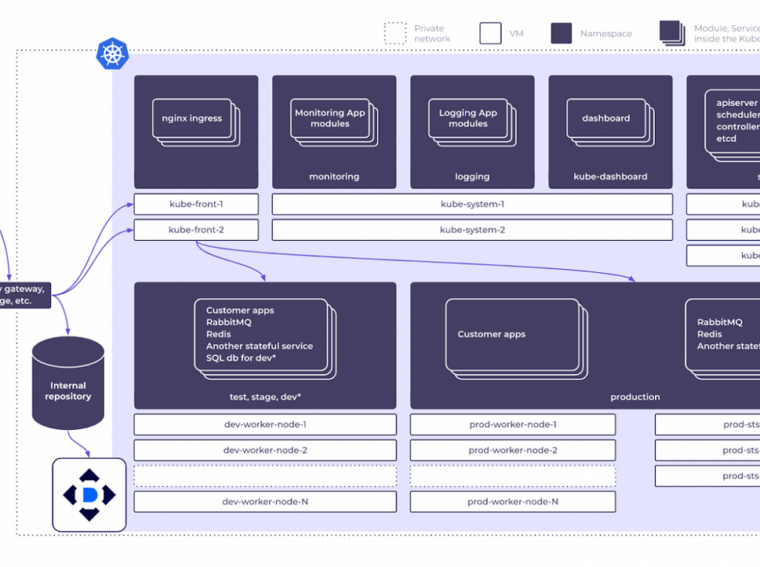

But sometimes you find yourself attempting (or planning) to download the same package over and over again. It may be that Kubernetes cluster you’re experimenting with – 12 VMs running a bog standard version of your favourite host OS – or sometimes even the frivolous download and update of a slightly different Ubuntu flavour (because we find that sort of thing fun). In that case, having a copy of a package available on your local network will greatly reduce the download time, and save some bandwidth on an internet connection already groaning under the weight of Netflix and a couple of remote Citrix sessions.

So the question is: how do you find an easy and transparent way of ‘caching’ a package locally, such that a machine needing it will find it without a massive configuration overhead? There are a couple of options:

- An enterprise type solution like Satellite (or Spacewalk, the upstream open source project). A problem with approaches like this is that they tend to want to synchronise the entire upstream repository. This could be several hundred gigabytes that you’ll have to somehow keep up to date. This is slow, requires a lot of storage and is quite inefficient, as you’re downloading and storing packages that you’ll never need.

- An ‘off the shelf’ pass through caching solution e.g. apt-cacher or Nexus OSS set up with some YUM proxy repos. These are better. They are more lightweight in terms of storage as they will only download a given package once. The overhead that these options have is around configuration: you’re inserting these caches in between YUM or APT and the server that has the packages. This means:

- you’ve got to set up the caching server to be aware of a particular upstream e.g. ie.ubuntu.com. If you add another repository, you’ve got to go back to your caching server and make sure it knows about that repository.

- you’ve got to update your client config to reference the caching server. Your sources.list can’t reference ie.ubuntu.com anymore – it has to go to your caching server to request packages. Again, once it’s set up, there’s no problem. But you won’t realise any benefit from your caching solution until you’ve got this config in place on all your potential clients. And – irritatingly, if you’re setting this up on a laptop that might operate away from your home caching server, you might find yourself switching between a proxied config and a non-proxied config, and the more complex this config change is, the more error prone this will be.

There are heaps of tools that fall into this category:

- Nexus OSS – has built in support for RPM and a community plugin for APT. Runs as a Java process, so it’s a little more memory hungry.

- Apt cacher and Apt Cacher NG – These seem to be smart enough to prevent a rewrite of your repo definitions.

- Squid deb proxy – seems to be some prepackaged config for squid to achieve a reasonable caching strategy.

- A more generic network proxy configured to hold onto packages via a specifically configured cache policy. This is a little harder to get right as the configuration of a proxy package such as squid is deliberately high level – it’s set in terms of networks, ACLs and a language to describe any HTTP or FTP (or Gopher?) traffic. But the payoff is great: you can tell it to store things ending in .rpm or .deb with a special policy that keeps these files hanging around for a long time. This is generic enough that you don’t need to call out specific upstreams. Client side configuration is limited to 2 steps:

- Enable a generic http or https proxy

- Switch off dynamic mirror selection – this is important as the cache uses the hostname, the path and the name of the file as a cache key. A file downloaded from ie.ubuntu.com won’t be served from the cache if you subsequently try to download it from uk.ubuntu.com.
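To make the mirror point concrete, here is a minimal sketch of a URL-based cache key. It is a deliberate simplification for illustration, not squid's actual key function, and the package path is made up; only the two hostnames come from the text above.

```python
from urllib.parse import urlsplit


def cache_key(url):
    """Simplified stand-in for a proxy cache key: (hostname, path incl. filename)."""
    parts = urlsplit(url)
    return (parts.hostname or "", parts.path)


# Hypothetical package path, used only for illustration.
PATH = "/ubuntu/pool/main/h/hello/hello_2.10_amd64.deb"

ie = cache_key("http://ie.ubuntu.com" + PATH)
uk = cache_key("http://uk.ubuntu.com" + PATH)

# Same file, different mirror: the keys differ, so the second
# download would be a miss rather than a hit.
print(ie == uk)  # False
```

Pinning every client to one mirror keeps the key identical across machines, which is what lets the second and third download come out of the cache.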

So with a bit of googling I found a couple of good steers. I ultimately went for a mix of the two solutions described below:

- midnightfreddie.com – Sets up a good cache for Debian and Ubuntu repos

- Lazy distro mirrors – similar approach for RPM, but also experiments with web accelerator mode and treats upstreams as cache-peers. The downside of this is that you’re back to nominating specific upstreams.

As always, start in jail

This will be hosted on the FreeNAS box, and will take advantage of the fact that it’s on most of the time and has storage to spare.

iocage create -n proxy dhcp=on allow_sysvipc=1 bpf=yes vnet=on -t ansible_template_v2 boot="on"

iocage fstab -a proxy /mnt/vol01/apps/proxy/cache /var/squid/cache nullfs rw 0 0

iocage fstab -a proxy /mnt/vol01/apps/proxy/logs /var/log/squid nullfs rw 0 0

Note there that I have externalized the logs directory and the cache directory into my generic ‘apps’ dataset.

The above configuration will also allow me to reference the proxy as ‘proxy’ or ‘proxy.home’ thanks to the DHCP config and the PiHole.

Install squid

Super easy:

Get configuring

Crack open /usr/local/etc/squid.conf:

#listen on port 80 because I always forget the other one

http_port 80

# Create a disk-based cache of up to 20GB in size:

cache_dir ufs /var/squid/cache 20000 16 256

cache_replacement_policy heap LFUDA

# Default is 4mb, but that won't do for packages

maximum_object_size 10096 MB

refresh_pattern -i .rpm$ 129600 100% 129600 refresh-ims override-expire

refresh_pattern -i .iso$ 129600 100% 129600 refresh-ims override-expire

refresh_pattern -i .deb$ 129600 100% 129600 refresh-ims override-expire

refresh_pattern ^ftp: 1440 20% 10080

refresh_pattern ^gopher: 1440 0% 1440

refresh_pattern -i (/cgi-bin/|\?) 0 0% 0

refresh_pattern . 0 20% 4320

The important bits there:

- cache_replacement_policy heap LFUDA – Least Frequently Used with Dynamic Aging. This is a deviation from LRU (Least Recently Used), which keeps objects based on how recently they’ve been hit. LFUDA optimizes for byte hits rather than hits by keeping bigger (potentially less popular) objects around.

- maximum_object_size 4096 MB – increase the maximum size of the objects in the cache. Important given the above change, and also as package sizes can vary dramatically, and a great many of them will be above the 4mb limit that’s the default.

- refresh_pattern: [Matt Wagner](https://ma.ttwagner.com/lazy-distro-mirrors-with-squid/) made me laugh with his description of ‘do unholy things to the refresh patterns’. Yes – you have to break all sorts of ‘rules’, possibly ones that would make proxy admins everywhere have convulsions. But this proxy is being tailored for a VERY specific purpose.

- -i .rpm$ – Case insensitive match to things ending in .rpm

- 129600 100% 129600 – min, percent, max – i.e. keep objects around for 90 days. These usually govern objects that don’t have an explicit expiry time set.

- refresh-ims override-expire – refresh-ims makes squid check with the backend server when someone does a conditional get, and override-expire enforces min age even if the server sent an explicit expiry time. According to the squid docs, the latter violates an HTTP standard, but again, this is not a general purpose proxy server.
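The min, percent and max values above can be sketched as a small freshness check. This is a simplified model for objects without an explicit expiry time, not squid's actual implementation; the function name and parameters are my own, and client cache-control headers and the option flags are left out.

```python
MINUTES_PER_DAY = 24 * 60


def is_fresh(age_min, min_min, percent, max_min, lm_age_min=None):
    """Simplified freshness test for an object with no explicit expiry.

    age_min:    minutes since the object was fetched into the cache
    lm_age_min: minutes between Last-Modified and the fetch, if known
    """
    if age_min > max_min:
        return False  # older than max: stale
    if age_min <= min_min:
        return True   # no older than min: fresh
    if lm_age_min is not None:
        # In between: fresh while the age is below percent of the
        # object's age at fetch time (the "LM-factor" heuristic).
        return age_min < lm_age_min * percent / 100
    return False


PACKAGE_RULE = (129600, 100, 129600)  # min, percent, max from the config above

print(129600 / MINUTES_PER_DAY)                         # 90.0
print(is_fresh(30 * MINUTES_PER_DAY, *PACKAGE_RULE))    # True
print(is_fresh(100 * MINUTES_PER_DAY, *PACKAGE_RULE))   # False
```

Because min and max are both 129600, the percent value never gets a say for packages: anything up to 90 days old counts as fresh and anything older counts as stale.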

On Ubuntu – add the following to /etc/apt/apt.conf.d/00proxy.conf:

Acquire::http::proxy "http://proxy.home:80";

Acquire::https::proxy "http://proxy.home:80";
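For the laptop that wanders away from the home network, one option is to generate this file only when the proxy answers. The helpers below are a hypothetical sketch (the function names are mine, nothing here ships with APT); they render the same two lines shown above and probe the proxy with a plain TCP connect.

```python
import socket


def proxy_reachable(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections and timeouts.
        return False


def apt_proxy_conf(host, port):
    """Render the two Acquire lines for the given proxy."""
    url = f"http://{host}:{port}"
    return (
        f'Acquire::http::proxy "{url}";\n'
        f'Acquire::https::proxy "{url}";\n'
    )


print(apt_proxy_conf("proxy.home", 80), end="")
```

A wrapper script could call proxy_reachable("proxy.home", 80) at login and write either this text or an empty file to /etc/apt/apt.conf.d/00proxy.conf, which keeps the switch between proxied and non-proxied config down to a single step.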

On CentOS 7 – add the following in the [main] section of /etc/yum.conf:

proxy=http://proxy.home:80

Seeing it in action

The miss

1543625283.161 2996 192.168.0.36 TCP_MISS/200 104528 GET http://mirror.centos.org/centos/7/os/x86_64/Packages/python-urllib3-1.10.2-5.el7.noarch.rpm - HIER_DIRECT/88.150.173.218 application/x-rpm

The hit

1547591894.456 0 192.168.0.98 TCP_HIT/200 104525 GET http://mirror.centos.org/centos/7/os/x86_64/Packages/python-urllib3-1.10.2-5.el7.noarch.rpm - HIER_NONE/- application/x-rpm

1547591894.461 0 192.168.0.218 TCP_HIT/200 104525 GET http://mirror.centos.org/centos/7/os/x86_64/Packages/python-urllib3-1.10.2-5.el7.noarch.rpm - HIER_NONE/- application/x-rpm
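To see how the cache is doing overall rather than one request at a time, a short script can tally the result codes. This sketch assumes squid's default native access log layout (timestamp, elapsed, client, code/status, bytes, method, URL, ...), which matches the lines above; the sample data is those three lines and the function name is my own.

```python
from collections import Counter

URL = ("http://mirror.centos.org/centos/7/os/x86_64/Packages/"
       "python-urllib3-1.10.2-5.el7.noarch.rpm")

SAMPLE = [
    f"1543625283.161 2996 192.168.0.36 TCP_MISS/200 104528 GET {URL} - HIER_DIRECT/88.150.173.218 application/x-rpm",
    f"1547591894.456 0 192.168.0.98 TCP_HIT/200 104525 GET {URL} - HIER_NONE/- application/x-rpm",
    f"1547591894.461 0 192.168.0.218 TCP_HIT/200 104525 GET {URL} - HIER_NONE/- application/x-rpm",
]


def summarise(lines):
    """Count result codes, bytes served from cache, and total bytes."""
    codes = Counter()
    hit_bytes = total_bytes = 0
    for line in lines:
        fields = line.split()
        if len(fields) < 7:
            continue  # skip blank or truncated lines
        result = fields[3].split("/")[0]  # TCP_HIT from TCP_HIT/200
        size = int(fields[4])
        codes[result] += 1
        total_bytes += size
        if "HIT" in result:  # any result code containing HIT
            hit_bytes += size
    return codes, hit_bytes, total_bytes


codes, hit_bytes, total_bytes = summarise(SAMPLE)
print(codes["TCP_HIT"], codes["TCP_MISS"])  # 2 1
print(f"{hit_bytes / total_bytes:.1%}")     # 66.7%
```

With the jail layout above, the real input would be something like open("/var/log/squid/access.log"), assuming squid's default log file name inside the mounted logs directory.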

We’ve had to do some unholy things, but those have been limited in scope. So it’s more a ‘targeted policy of unholiness’ for packages, while the rest of the internet skates by fairly unscathed. So far, the only thing that I’ve found that doesn’t like making connections through the proxy is the Linux Discord app. I’m not sure if that’s related to the LFUDA policy, or some sort of leakage through to one of the more aggressive refresh patterns.

This is far from a proxy-panacea, but it keeps the Netflix stream running and my wife happily watching ‘Say yes to the dress’. This is a good thing.